Where to Buy or Rent GPUs for LLM Inference: The 2026 GPU Procurement Guide

Authors

Last Updated

Share

For teams working to self-host LLMs, inference at scale isn’t just about having powerful models for your own use case. It’s also about supporting those models with the right hardware.

That means getting the right GPU, in the right region, at the right price, and at the right time.

Make the wrong choices early on, and you could end up with underutilized resources, unexpected operational costs, or delayed deployments. This is especially common when buying or renting GPUs for on-prem LLM deployment. It requires higher upfront costs and longer lead times, and flexibility is limited compared to cloud-based options.

In this guide, we will cover:

- How to choose the best GPUs for your LLM inference needs

- Popular GPU sourcing options and their pros and cons

- Why you should run GPUs across multiple regions, clouds and providers

- How our Bento Inference Platform helps you manage and optimize heterogeneous GPU environments

Key factors for choosing GPUs#

Before we look at where to source GPUs, it’s important to understand what actually drives your GPU choice.

GPU memory#

GPU memory (VRAM) determines how large a model you can serve and how long a context window you can support.

During inference, each new token stores intermediate results in memory (KV cache) so the LLM doesn’t have to recompute previous tokens. This caching mechanism drastically improves speed, but it also consumes a significant amount of memory.

The longer the context, the larger the KV cache grows. Since GPU memory is limited, the KV cache often becomes the bottleneck for running LLMs with long contexts.

If you don’t have enough VRAM, you might need to split the model across multiple GPUs using tensor parallelism or offload the KV cache elsewhere.

In short, VRAM = headroom. It gives you flexibility for longer prompts, more requests, and larger models without having to re-architect your setup.

When choosing GPUs, estimate how much memory your model actually needs. Add a buffer for KV cache growth and runtime overhead.

Performance#

Performance determines how quickly your model can generate responses and how efficiently you’re using your GPUs.

There are many factors that can impact performance. Before buying or renting GPUs, focus on two key metrics:

- Memory bandwidth: Refers to the maximum rate at which data can be transferred between the GPU's processing cores and its memory. Higher bandwidth means the model can load weights and process tokens faster.

- Compute throughput: FLOPS (floating-point operations per second) is a useful spec to understand the theoretical compute power of a GPU. For inference, however, what users care about more is tokens per second.

Don’t rely solely on marketing numbers. Always benchmark your GPU to understand real-world performance and efficiency. I recommend llm-optimizer, an open-source tool that helps you benchmark and optimize any open LLMs on different GPUs.

Cost and availability#

When sourcing GPUs, two practical questions always come:

How much will it cost, and can you actually get one when you need it?

Here’s what you need to know:

- Stronger performance often means higher cost. For example, on Google Cloud (us-central1), an NVIDIA L4 costs about $515.99/month, while an H100 can exceed $8,000/month.

- GPU prices vary widely by region and provider. High-end GPUs like H100 and H200 are both scarce and expensive, and some providers only offer them in select regions.

- You’ll see different pricing models. Most providers offer both on-demand rates and discounted long-term commitments for reserved capacity.

- You don’t need high-performance and costly GPUs for every task. Consider your price-performance ratio before making any decision.

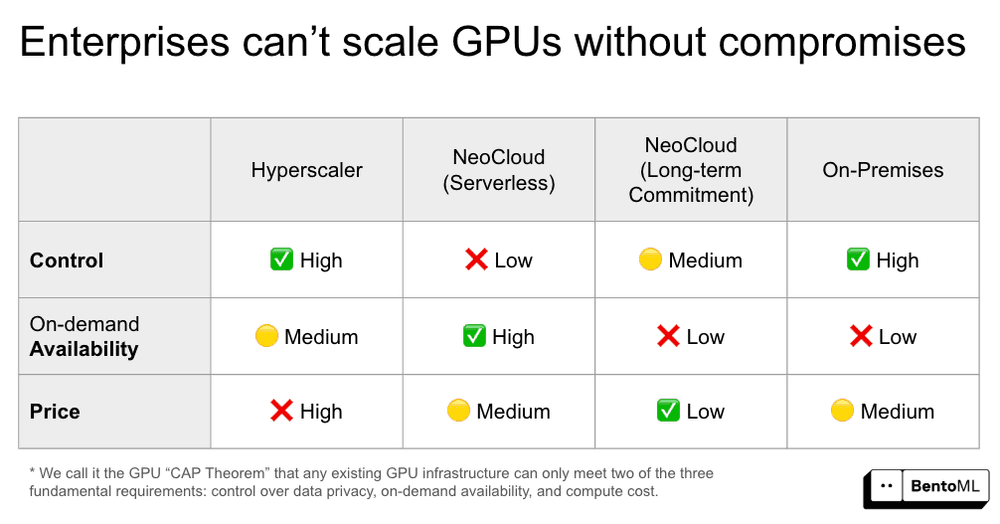

For enterprise AI teams, a bigger challenge is what we call the GPU CAP Theorem: A GPU infrastructure cannot guarantee Control, on-demand Availability, and Price at the same time.

The key is to balance these three based on your workload patterns. Learn more about how to Beat the GPU CAP Theorem with Bento Inference Platform.

Ecosystem support#

Lastly, check what software ecosystem your GPUs support.

NVIDIA GPUs still dominate for production inference due to their mature driver stack, better kernel fusion, and wide community support.

However, AMD is catching up fast with the ROCm ecosystem and cards like MI300X and MI355X, which already support many PyTorch and Hugging Face models.

If you plan to deploy across vendors, ideally, your inference stack should support both CUDA and ROCm environments to avoid vendor lock-in.

Primary GPU sourcing options#

Now that you understand what drives GPU decisions, let’s look at where you can actually get GPUs.

Hyperscalers#

Major cloud providers offer the fastest way to get started. You can spin up a GPU instance in minutes.

Popular options include AWS G5/G6/P4/P5, Azure NC/ND, and Google Cloud A2/A3 series. They cover a wide range of GPUs from both NVIDIA (e.g., T4, L4, A100, H100, H200) and AMD (e.g., MI250X, MI300X, MI355X).

Pros:

- Overall instant capacity and global availability

- Enterprise-grade security and reliability

- Integrated networking, monitoring, and storage within their broader ecosystem

Cons:

- High cost for inference at scale

- Extra setup required for fast autoscaling for LLM workloads

- Quotas and limited availability for high-end GPUs like H100 and H200

- Vendor lock-in risk

GPU cloud providers#

Specialized GPU clouds, or NeoClouds, focus primarily on AI workloads. They often provide better price/performance and access to a wider variety of GPUs than hyperscalers.

Popular options include CoreWeave, Nebius, Vultr and Lambda.

Pros:

- Lower hourly rates than traditional cloud providers

- Access to inference-optimized GPUs

- Tailored environments for LLM inference

Cons:

- Fewer regions and smaller support teams

- Less mature networking and compliance tooling

- Less control for regulated or enterprise environments

Decentralized GPU marketplaces#

Emerging decentralized networks let you rent compute from distributed GPU owners.

They’re experimental but increasingly popular for cost optimization.

Popular options include Vast.ai, SF Compute, and Salad.

Pros:

- Extremely competitive pricing

- Flexible, short-term rentals

- Good for burst capacity and experimentation

Cons:

- Reliability and SLA vary by provider

- Limited enterprise-grade security and compliance

Direct purchase#

Buying GPUs outright gives you full control over hardware and deployment.

You can source cards directly from NVIDIA and AMD, or through original equipment (OE) partners like Dell, GIGABYTE and HPE.

Pros:

- Full ownership and configuration freedom

- Predictable long-term cost (no hourly billing)

- Ideal for on-prem or air-gapped environments

Cons:

- High upfront investment and longer procurement cycles

- Require in-house operations for cooling, networking, and maintenance

- Difficult to scale quickly when demand spikes

Each sourcing model has its place. The key is to mix and match.

Why running GPUs across multiple regions or providers matters?#

Running LLM inference in one region or on one cloud might seem simpler, until you hit a traffic surge, a GPU shortage, or a compliance issue.

That’s when teams realize why multi-cloud, cross-region or hybrid deployments are essential for scalable, cost-efficient, and reliable LLM inference.

Inference traffic is never predictable#

Training happens in planned, predictable batches.

Inference workloads don’t. It happens with real users in real time, and it’s rarely steady.

A product launch, a marketing campaign, or even a viral post can flood your inference endpoints overnight. Demand can jump from near-idle to GPU-saturation in minutes.

Your compute capacity can max out at the worst possible time. Your carefully planned infrastructure can crumble under the load.

That’s why the smartest AI teams no longer rely on a single cloud or region. They spread their workloads across multiple places to have extra headroom.

When one region fills up, traffic simply reroutes to another. When a provider runs short on GPUs, requests overflow seamlessly elsewhere.

For LLMs that depend on high-end GPUs like NVIDIA H200 or AMD MI355X, this flexibility isn’t optional; it’s survival. These GPUs are expensive, scarce, and often back-ordered for months.

Multi-region setups let you keep serving when your competitor is waiting in line.

Keep data where it belongs#

Regulatory and privacy rules are getting stricter every year.

Why? LLMs increasingly power applications that handle sensitive information, from AI agents to RAG systems.

In sectors like healthcare, finance, and government, enterprises running LLMs on customer or proprietary data must follow data residency laws. They are required to store and process data within specific borders (e.g, the EU’s GDPR).

A single global endpoint can quickly violate those requirements.

By contrast, a multi-cloud, cross-region architecture solves this elegantly:

- Deploy a copy of your model close to the data it uses.

- Serve European users from EU-based GPUs, U.S. users from U.S. regions, and so on.

- Stay compliant and keep latency low.

This keeps data local and compliant, without sacrificing uptime or performance.

Find the cheapest regions to run your LLMs#

GPUs don’t cost the same everywhere, not even close.

Pricing varies by provider, region, and even availability zone.

Take Google Cloud’s a3-highgpu-1g instance (1×H100 GPU):

| Region | Monthly Cost (USD) |

|---|---|

| us-central1 | $8,074.71 |

| europe-west1 | $8,885.00 |

| us-west2 | $9,706.48 |

| asia-southeast1 | $10,427.89 |

| southamerica-east1 | $12,816.56 |

That’s nearly a 60% price gap between the cheapest and most expensive regions.

If you’re locked to one region or cloud, you miss the chance to deploy where GPUs are both available and affordable.

Multi-cloud and cross-region setups let you:

- Route traffic to regions with lower GPU costs

- Shift inference workloads when prices or availability change

- Reduce overall compute spending without compromising performance

Avoid vendor lock-In#

Building your inference stack on one cloud feels convenient, until something breaks or prices spike.

Then you’re stuck.

Vendor lock-in doesn’t just limit portability; it weakens your future negotiating leverage.

If your entire workload runs on one platform, you’re forced to accept their pricing, quotas, and roadmap.

Running inference across multiple clouds removes that single point of dependency.

You can:

- Shift workloads when prices rise

- Keep latency steady when a region throttles supply

- Choose new GPU types as soon as they’re available elsewhere

Think of it as diversifying your compute portfolio. It’s the same way you’d diversify financial assets.

Bento: One inference stack, any GPU#

When done right, multi-cloud, cross-region, or hybrid inference gives you a competitive advantage.

But making it work isn’t easy. For example, you need to:

- Manage GPUs across different providers

- Configure autoscaling for dynamic workloads with fast cold starts

- Maintain unified observability across every environment

Doing all this manually? That’s a full-time job for your engineering team, the time for which should be spent on innovation, not infrastructure.

That’s exactly why we built the Bento Inference Platform.

It provides a unified compute fabric, an orchestration layer that lets enterprises deploy and scale inference workloads across any GPU source, anywhere.

Here’s how it works:

- BYOC (Bring Your Own Cloud): Run inference directly inside your private cloud or VPC, whether it’s AWS, GCP, CoreWeave, Nebius or any other provider.

- Cross-region, multi-cloud, on-prem, or hybrid: Manage and autoscale GPUs seamlessly across data centers, hyperscalers, and GPU clouds — all from one control plane. Bento supports both NVIDIA and AMD GPUs.

- Tailored inference optimizations: Out-of-the-box features like prefill–decode disaggregation, KV-cache offloading, and LLM-aware routing help you maximize throughput and reduce cost per token.

- Fast autoscaling: Scale GPU resources up or down dynamically with scale-to-zero support. When traffic surges, Bento intelligently shifts workloads to the regions or clouds where GPUs are most available and affordable.

- LLM-specific observability: Track the metrics that matter for inference, such as TTFT (Time-to-First-Token) and ITL (Inter-Token Latency), all on a centralized dashboard.

- Python-first workflow: Define, deploy, and ship inference services with just a few lines of Python code.

With Bento, you get one inference stack that works with any GPU, in any region, on any cloud. Let your team focus on shipping faster AI products while Bento handles the infrastructure.

Still have questions?

- Read our LLM Inference Handbook

- Learn about the best open-source LLMs in 2026

- Schedule a call with us to see how Bento fits your inference stack

- Join our Slack community to get the latest information on GPU sourcing

- Sign up for our Bento Inference Platform and start deploying cross-region and multi-cloud LLMs today

FAQs#

What GPUs are best for LLMs?#

For large models, GPUs like NVIDIA H100 and H200, or AMD MI300X and MI355X, offer the ideal balance of compute power, memory bandwidth, and VRAM capacity for inference workloads.

For smaller models, GPUs such as NVIDIA A10, L4, or AMD MI250 provide strong price-to-performance without sacrificing quality.

Always benchmark GPUs with your own LLMs. There’s no single “best” GPU, only the one that fits your specific model size, context length, and performance goals.

Learn more about how to match GPUs with different LLMs in our handbook.

Hyperscalers vs. GPU clouds vs. owned hardware: how do I choose?#

- Hyperscalers (AWS, Azure, GCP): Best for flexibility, global reach, and enterprise security, but most expensive long-term.

- GPU Clouds (CoreWeave, Nebius, Lambda): Focused on AI workloads, offering better price/performance and more GPU variety.

- Owned hardware: Ideal for stable, predictable traffic and strict compliance, but requires upfront investment and ops overhead.

Our AI infrastructure survey showed that a significant 62.1% of respondents run inference across multiple environments. As enterprises scale, they are more likely to succeed with a hybrid strategy, combining cloud elasticity with dedicated or BYOC resources for cost control.

How do I cut inference cost without losing quality?#

Focus on optimization, not just cheaper hardware. Techniques like speculative decoding, prefill–decode disaggregation, and KV-cache offloading can speed up inference and cut cost without degrading quality.

You can also route requests to cheaper regions or use multi-cloud scheduling to secure the best rates automatically.

Platforms like Bento make these optimizations easier. You can apply them out of the box and run LLM inference efficiently across any GPU source.