A Software Engineer’s Guide To Model Serving

Authors

Last Updated

Share

If you’ve been in software for the last 10 years, you’ve likely heard the hype around machine learning and artificial intelligence. Perhaps it’s come up in discussion with one of your coworkers, or maybe you’ve had an existential conversation with your neighbor over a few beers about machines “taking over”. The truth is, ML and AI aren’t going anywhere anytime soon. The applications of ML touch nearly every industry, and today, we’re just scratching the surface of its capabilities and use cases.

As consumers, we’re entering a new era, which means for us software engineers, there’s a lot to learn about ML.

Then, And Now#

Traditionally, the Data Science team at a company had responsibility over the entire lifecycle of an ML model. A common scenario might look like this: Data Scientists would build a model, then run predictions across a static dataset. From there, they might build dashboards for senior executives to use for decision-making.

Today, these decisions need to happen at scale and in real-time. Consider the instantaneous nature of Netflix’s recommendation system for shows and movies, or chatbots that understand and respond to customer questions. Businesses are increasingly realizing that these types of use cases enhance the user experience — and they’re only possible with model serving applications.

Because of this, software engineers are typically the ones who are responsible for deploying these ML models as prediction services alongside other applications. In this article, we’ll explain the fundamental considerations when it comes to model serving and deployment.

What Is Model Serving?#

The term “model serving” is the industry term for exposing a model so that other services can call for a prediction.

There are a couple different types of model serving:

1. Online serving: A model is hosted behind an API endpoint that can be called by other applications. Typically the API itself uses either REST or GRPC.

2. Scheduled batch serving: A service which when called runs inference on a static set of data. Jobs can be scheduled on a recurring basis or on-demand.

We’ll primarily focus on online serving for this article, but know that batch and streaming use cases contain many of the same considerations.

Production model serving use cases have all the requirements of traditional software deployment, with the addition of new requirements, specific to ML. But don’t worry, you’re not going to have to learn a completely new stack. There are a lot of traditional software development concepts you can lean on.

So, where do most engineers start? Presumably, you already have a model that a data scientist has developed. In a nutshell, your responsibility will be to wrap the model in a serving application that will load the model, and then pass inputs to it for predictions. The service will need to be deployed to your organization’s preferred cloud environment, where other applications can call the service and make use of the prediction logic.

Building Your ML Model Serving Application#

Building ML applications can look a lot like building traditional software applications. When building your application, try to choose specialized ML tools if possible. They give you the right primitives to build something quick and simple, but also contain the features necessary to scale as your application grows. A generic framework like Flask is a handy tool, but can fall over quickly because it isn’t purpose built for ML. The following are key criteria to look at when choosing which tools to work with.

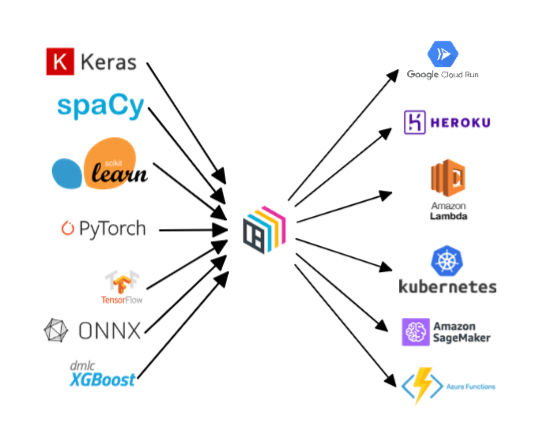

Supporting Popular ML Frameworks#

Data scientists have a lot of options to choose from when it comes to frameworks for training a model. Each framework has its strengths and weaknesses when it comes to training the model itself. For example, some frameworks work better for tabular data, while others work better for unstructured data, like images. As a software engineer, it’s our job to take the trained model from whichever framework was used, and package it into our service. This means we need to ensure it is saved in such a way that it can be loaded again inside our service and make the same predictions as in training.

The issue with model reproducibility is that each framework has nuances that can make reproducibility difficult. It’s important to do your research and make sure that you are saving and loading the model correctly. If you can, choose a model serving tool that has integrations with all of the popular frameworks so that you don’t have to learn all the intricacies of each. With the right integrations, your data scientists are free to use whichever framework they see fit.

Preprocessing And Post-Processing Code#

Data scientists will routinely add logic for transforming inputs and outputs when calling a model for inference. Preprocessing code prepares and cleans the data so that it’s suitable to be passed to the ML model. Post-processing code takes the output of a model and transforms it so that external services can understand the prediction response.

It’s important that this transformation code be part of the service so that predictions are run in a consistent manner. These transformations can get complex, but by using a Python-based serving framework, you easily package the code without having to rewrite it in a different language. Following the OpenAPI standard is helpful so that you can document the service in a standard way and expose the API behind a nice Swagger interface where users can test the endpoint themselves.

Dependency Management#

Dependency management should be a familiar concept — in ML, it can be argued that it’s even more critical to consider because when things break, the results are much more subtle. A normal software application might throw a stack trace and break pretty visibly. For an ML application, the model may still process data and make predictions, but the predictions may be less accurate (and therefore, worse quality) than when it was trained. It’s important to run the model with the same library version as it was trained with. This means recording the exact version which was used in training, and making sure that the dependencies that are downloaded in production are the same.

Versioning#

Code versioning is a very typical software development practice. You may be used to using Github for versioning code, but you may find that it isn’t the best solution for ML models due to their complexity and size. Having a centralized way to version code, models, dependencies and deployment configuration together is important to avoid having any of these components out of sync later during deployment. The solution could be as simple as storing a snapshot of these components in S3. A model repository can be used to keep track of deployments and, if needed, roll back to earlier versions.

Deploying Your Application#

Once you’ve tackled the application-level nuances, you’ll need to think about deploying your service. Many of the development patterns that you’ll read about below should sound familiar, with slight twists for an ML service.

Here are some important considerations:

Containerization#

To ensure reproducibility, we recommend using Docker to isolate your service and run it in a consistent way across environments. Choose a docker image that is well suited for the framework that you are using. For example, don’t use Windows if the ML framework that you’ve trained on does not support Windows.

With most automated software deployments, you’ll need to make sure your code and related resources are copied into the container — meaning, the models you’re using as well as the appropriate library dependencies. In addition, you’ll need to make sure any required native libraries are also provisioned on the image that you’re building. Some ML libraries require some very specific native libraries in order to run inference efficiently.

Where To Deploy#

For most teams who are deploying prediction services for the first time, you probably want to use the cloud provider (or on-premise environment) that you’re already using for other applications. This will allow you to reduce the learning curve and maintenance burden by using existing deployment patterns. Once you’ve chosen the cloud provider, you’ll want to decide which of their deployment patterns best fits your use case.

Let’s take AWS, for example. Below, we’ve shared our assessment of benefits, considerations, and caveats for deploying in AWS.

Deploying In#

Benefits:

- Straightforward and scalable

- You don’t have any servers to manage

Good for:

- Applications with simple models that you want to test out internally

- Applications don’t need to be running all the time

Considerations:

- When you deploy your newly made Docker container to Lambda, make sure that the way you packaged the code fits Lambda’s requirements to call the endpoint

- Default logging uses CloudWatch which can be difficult to use for debugging

- Has a 10Gb limit on the container size

Caveats:

- Does not support GPU acceleration, which can be a dealbreaker for performance on certain types of models

- Cold starts for prediction services can take longer due to loading up the whole model into memory

Deploying In#

Benefits:

- Ability to provision machines that have GPUs

- Purpose built, end to end ML platform that supports a variety of ML specific workflows

Good for:

- Heavy ML use cases that require a lot of compute resources

Considerations:

- Make sure that the code is laid out with the correct endpoints. SageMaker has some very specific requirements in order for the code to run properly

Caveats:

- As with lambda, logging uses cloudwatch out of the box which can be difficult to use

- Debugging can be difficult because you can’t log into the machines

- Monitoring can be a struggle because the primary interface is the AWS console

- If an external service needs to access you’ll need to setup an api gateway for access

Deploying In#

Benefits:

- Flexibility to choose how to run your service (as long as it’s in a container)

- Ability to choose your own hardware

Good for:

- Running a variety of workloads fairly easily

- Running on machines that have GPUs, without the complexity of something like SageMaker

Considerations:

- If you’re already familiar with ECS, this is a really good option. It allows for the flexibility of different hardware and OS options.

- You’ll have to implement your own logging and monitoring solution

Caveats:

- If you don’t want to run with containers this isn’t a good option, but if you’re just starting out and want to gain some experience, this is a great option because it’s about as simple as a container service gets.

Other AWS Deployment Options#

Other options in AWS include a Kubernetes deployment on EKS or a raw EC2 deployment where you can control everything. When choosing your deployment options, consider what you have experience with along with the scale with which you need to run your application. Specialized hardware can be worth a look if you have particular use cases, but as with a traditional software deployment, make sure that the option you choose fits your particular needs.

Infrastructure As Code#

Once you’ve decided on where to deploy your prediction service, it’s useful to use a tool like Terraform to ensure deployment reproducibility and to coordinate rolling deployments.

It also gives you the ability to deploy in many different ways. Lambdas can be provisioned behind API gateways or called directly. Elastic load balancers can be used to scale the service as traffic or CPU usage increases. These decisions will be specific to your company and use cases. It never hurts to start with something simple and then evolve the architecture from there.

Choosing The Right Tools#

Kudos to you if you made it through this entire article! As you can see, there’s a lot to familiarize yourself with when building an ML service.

The good news is, there are tools that handle these concerns out of the box.

That’s right. Here at BentoML, our open source model serving framework addresses these considerations so that you don’t have to have to worry about them. The best part is, you can get started quickly and easily. Got more questions? We invite you to join our growing Slack community of ML practitioners today.